SDR transmit and clean signals

If you have a transmit-capable SDR, you may have heard that you need to filter its output before transmitting to the world, and certainly before amplifying the signal.

I have a TinySA Ultra spectrum analyzer, and here I'll show you some screenshots of just how true that is.

I tested this with my USRP B200, transmitting a pure carrier around 145MHz and 435MHz.

Oh, and a word of caution: if you want to replicate this, add an inline attenuator so you don't damage your spectrum analyzer. I used a cheap 40dB one, but in most of the graphs I adjusted the values to show the real signal strength, as if the attenuator weren't there. The TinySA keeps resetting the offset, so I missed it in two of the graphs (noted below), and I can't be bothered reconnecting everything.
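Correcting for the attenuator is plain addition in dB: the level at the SDR's output is the analyzer reading plus the attenuator's loss. A minimal Python sketch, assuming the attenuator loses exactly 40dB at these frequencies (real ones have some tolerance, so treat the corrected numbers as approximate):

```python
def corrected_dbm(measured_dbm: float, attenuator_db: float = 40.0) -> float:
    """Undo an inline attenuator: level at the SDR = analyzer reading + attenuator loss.

    Assumes the attenuator's loss is exactly attenuator_db at the measured frequency.
    """
    return measured_dbm + attenuator_db


# The two graphs where the analyzer's offset was missed.
for reading in (-32.5, -35.5):
    print(f"{reading:+.1f} dBm on screen -> {corrected_dbm(reading):+.1f} dBm at the SDR")
```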

tl;dr

- Harmonics can be almost as strong as the fundamental. You need to filter these.

- Transmitting at maximum output gain may cause lots of unwanted signals right around your fundamental. You cannot filter these. You need to not generate them.

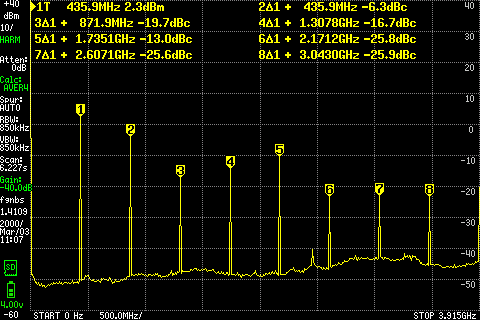

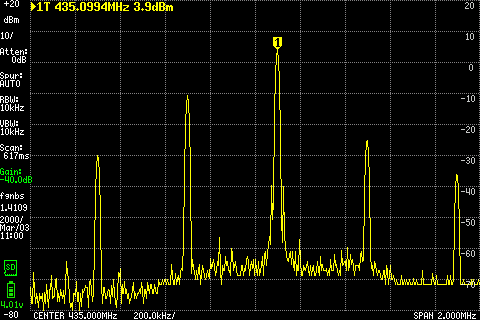

Harmonics

(This graph is actually 40dB off, so the fundamental should read +7.5dBm, not -32.5dBm.)

Reducing the output gain did not meaningfully fix the problem. The best I saw, using half output gain, was the strongest harmonic sitting 9dB below the fundamental. That's way too strong.
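Harmonics sit at integer multiples of the carrier frequency, and a level difference in dB converts to a power ratio as 10^(dB/10). A small Python sketch of both; note that the 3rd harmonic of a 145MHz carrier lands at 435MHz, right in the 70cm band:

```python
def harmonic_frequencies(fundamental_hz: float, count: int) -> list[float]:
    """Return the 2nd, 3rd, ... harmonics (integer multiples) of a carrier."""
    return [n * fundamental_hz for n in range(2, count + 2)]


def db_to_power_ratio(db: float) -> float:
    """Convert a level difference in dB to a linear power ratio."""
    return 10 ** (db / 10)


for f0 in (145e6, 435e6):
    listed = ", ".join(f"{f / 1e6:g} MHz" for f in harmonic_frequencies(f0, 3))
    print(f"{f0 / 1e6:g} MHz carrier -> harmonics at {listed}")

# A harmonic 9 dB below the fundamental, expressed as a fraction of its power.
print(f"-9 dBc = {db_to_power_ratio(-9):.3f} x the fundamental's power")
```

So a harmonic 9dB below the fundamental still carries roughly an eighth of the fundamental's power.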

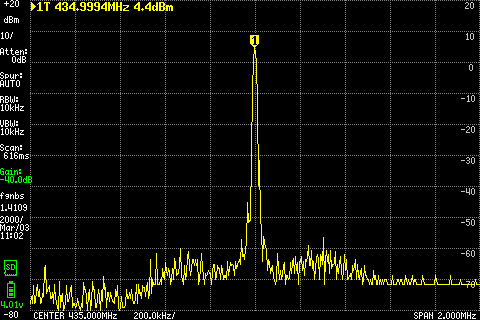

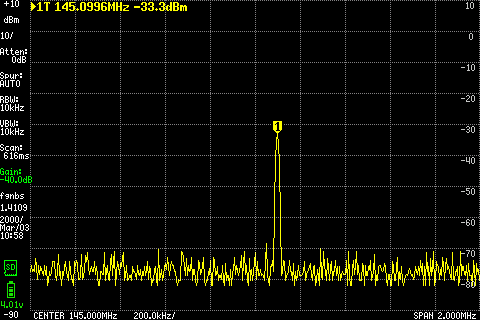

I added a cheap band-pass filter (FBP-144), which made it all look great:

(This graph is also 40dB off, so the fundamental should read +4.5dBm, not -35.5dBm.)

I’ve been unable to find such a quick, easy, and cheap solution for the 440MHz band.

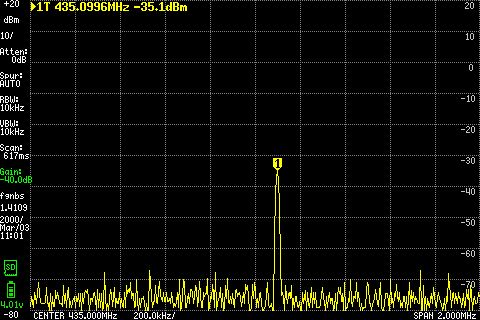

Too high an output gain

For my signal generator I set the Gain Type to Normalized, meaning a gain between 0.0 and 1.0. I started with full power.
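As I understand UHD's documentation, a normalized gain is interpolated linearly across the device's gain range in dB, not across output power. The sketch below assumes that mapping and a B200 TX gain range of 0 to 89.75dB (check what `uhd_usrp_probe` reports for your device); both are assumptions, not measurements from this setup:

```python
# Assumption: B200 TX gain range; verify with uhd_usrp_probe on your own device.
TX_GAIN_MIN_DB = 0.0
TX_GAIN_MAX_DB = 89.75


def normalized_to_db(norm: float,
                     gain_min_db: float = TX_GAIN_MIN_DB,
                     gain_max_db: float = TX_GAIN_MAX_DB) -> float:
    """Map a normalized gain in [0.0, 1.0] linearly onto the gain range in dB."""
    if not 0.0 <= norm <= 1.0:
        raise ValueError("normalized gain must be between 0.0 and 1.0")
    return gain_min_db + norm * (gain_max_db - gain_min_db)


for norm in (1.0, 0.53):
    gain_db = normalized_to_db(norm)
    print(f"normalized {norm:.2f} -> {gain_db:.2f} dB gain "
          f"({TX_GAIN_MAX_DB - gain_db:.2f} dB below maximum)")
```

If that mapping holds, a normalized setting of 0.53 is a gain setting roughly 42dB below maximum, far more than the 3dB that "half power" suggests, although actual output power need not track the gain setting exactly.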

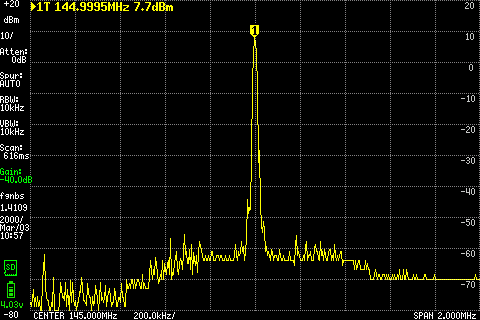

Zooming into the signal, everything looked mostly fine at full power:

Well, the noise floor looks uneven. Still, it’s low.

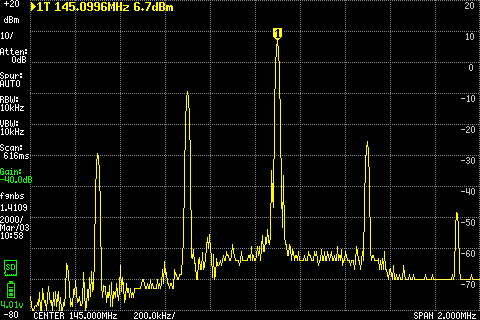

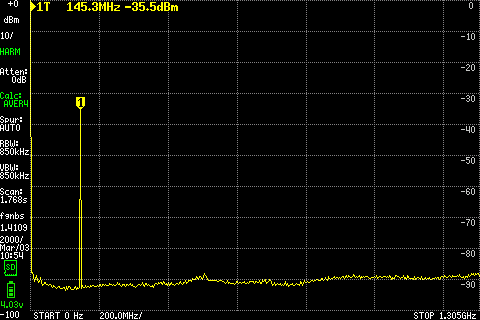

But now, keeping the tuned frequency the same, I started generating a sine wave at 100kHz instead of 0Hz.

Yuck!

It’s the offset that triggers it. Sending a 0Hz signal at 145.1MHz directly is super clean.
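Assuming the signal generator produces a complex (I/Q) baseband tone, a 0Hz signal is just a constant sample value, while a 100kHz tone makes I and Q swing through full-scale cosine and sine. On air, the tone appears at the tuned frequency plus the offset. A minimal pure-Python sketch, using a hypothetical 1MS/s sample rate; it only shows what changes in the samples, not why the B200 produces the unwanted signals:

```python
import cmath
import math

SAMPLE_RATE_HZ = 1e6  # hypothetical sample rate, not taken from the original setup


def complex_tone(offset_hz: float, sample_rate_hz: float, num_samples: int,
                 amplitude: float = 1.0) -> list[complex]:
    """Complex baseband samples of a single tone offset_hz away from the tuned frequency."""
    return [amplitude * cmath.exp(2j * math.pi * offset_hz * n / sample_rate_hz)
            for n in range(num_samples)]


def transmitted_hz(tuned_hz: float, offset_hz: float) -> float:
    """Where the tone ends up on air: tuned frequency plus baseband offset."""
    return tuned_hz + offset_hz


dc_tone = complex_tone(0.0, SAMPLE_RATE_HZ, 10)
offset_tone = complex_tone(100e3, SAMPLE_RATE_HZ, 10)

# 0 Hz: I and Q hold one constant value for every sample.
print([round(s.real, 2) for s in dc_tone], [round(s.imag, 2) for s in dc_tone])
# 100 kHz at 1 MS/s: one full cycle every 10 samples, I and Q swinging across full scale.
print([round(s.real, 2) for s in offset_tone], [round(s.imag, 2) for s in offset_tone])
```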

Pulling the normalized gain down to about half fixed it. Specifically, for me the unwanted signals disappeared at an output gain of 0.53 and lower.

So apparently it’s perfectly possible to generate a clean full-power signal exactly at my SDR's tuned frequency, but not 100kHz off. Unless you’re doing Morse code, this may mean you can’t actually get the “>10dBm” that the datasheet specifies.
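The datasheet figure is easier to picture in milliwatts: dBm is 10 times the base-10 logarithm of the power in mW, so 10dBm is 10mW, and the +7.5dBm fundamental from the harmonics graph is about 5.6mW. A small Python sketch of the conversion:

```python
import math


def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def mw_to_dbm(mw: float) -> float:
    """Convert a power in milliwatts to dBm."""
    return 10 * math.log10(mw)


for label, dbm in (("datasheet spec", 10.0), ("harmonics graph fundamental", 7.5)):
    print(f"{label}: {dbm:+.1f} dBm = {dbm_to_mw(dbm):.2f} mW")
```

Every dB you have to back off at the SDR is a dB that a later amplifier stage has to make up.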

Or maybe my B200 is a bit wonky?

Conclusion

- When you start planning a project that involves amplifying your SDR output, don’t assume you can start from the max power of your SDR.

- Adding a filter for harmonics isn’t just a good idea; it’s mandatory.